So I’m going to try something a little different here~

As I’m sure many of you are no doubt aware, I’ve been developing my own audio streaming driver for the Mega Drive, called SONAR, since around early August of this year. Progress on it has been fairly steady as of late and I’m hoping to have a proper source release of the driver ready by mid-January (that’s not a promise though!). However, I’m not exactly keen on waiting until then to put the word out about it beyond a few scattered messages on various Discord servers. Instead, I would like to try a new post format out.

This is gonna be an ongoing thread where I can share my journey of developing this driver with the community and allow others to voice their feedback as time goes on. Even though I call this a dev diary, the format of this thread is really more like a blog crossed over with a discussion post. This format could either turn out to be really great or really messy, but I’m optimistic about it.

I will go ahead and note right up front that this post is gonna be a lot longer than any of the subsequent updates I write since there’s a pretty sizable timeline to cover up to this point. I promise I’ll try to keep this interesting. That said, this post is going to assume a fair bit of prior knowledge on PCM playback and MD sound drivers, so if you’re not versed in those subjects, I can’t guarantee this will all make sense to you.

Oh, and uh, don’t expect super proper English or an overly professional tone from this post, either. As I write this, it’s a very late Sunday night following an absolutely dreadful shift at work, so I’m pretty much going to write this the same way I would explain it to someone if I was just giving them an off-the-cuff summary of my journey thus far. I’ve got enough of the professional essay writing shit to do in college anyway and I get the sense I’d just be wasting everyone's time if I approached this with any other tone but a casual one.

Without further ado, let’s get into it.

The Prologue

So I guess the most natural place to start is actually what I was doing before I started work on SONAR. Back in May of this year, I got the bright idea to try and make my own flavor of 1-bit ADPCM. I don’t fully remember exactly what drove me to do this, but nonetheless, I thought it was worth pursuing. I can, however, confirm that the idea of building a driver dedicated to streaming full songs was nowhere on my radar at the time. I was simply trying to put together a format that would act as an extremely lightweight alternative to 4-bit DPCM that still provided a reasonable level of quality; something you could use the same way you might use MegaPCM or DualPCM, but you know… shittier.

I was also doing this as a means to teach myself EASy68K. I’ve been out of practice with high-level languages for quite some time now, so I thought I could use EASy68K to just coast along with the knowledge I already have of 68k asm to write tools I’d need. This was, in hindsight, really fucking stupid, since the environment EASy68K provides is fairly limited and I’m in a position now where I kinda have to learn a higher-level language anyway (more on that later). I could’ve had a head start on that, but I chose not to. Dumb.

Anyway, I’m not gonna go into detail about what the theory behind this 1-bit format was or how unbearably slow the encoder was because it’s not super relevant to SONAR. All you really need to take away from this time period is that it was called Variable Delta Step (VDS for short) and that it was a colossal failure. I was pretty put off by the fact that my experiment had essentially boiled down to waiting 45 minutes at a time for the encoder to spit out something that sounded like a broken fax machine made angry love to a jammed floppy drive, so I pretty much chucked the project in the bin after working on it for about 3 days straight.

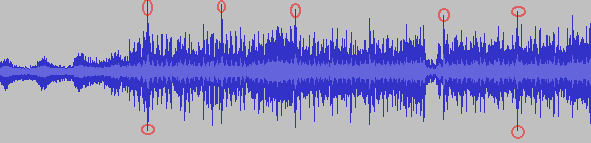

By that same token, I wouldn’t say it was a totally pointless endeavor. While working on VDS, I was primarily using Audacity to convert my sound clips from signed 16-bit PCM to unsigned 8-bit PCM. However, there was something I noticed about the conversions that puzzled me quite a bit: the 8-bit samples sounded noisy, even during passages of complete silence. Now, some noisiness is to be expected with the move to a lower fidelity format, and I did understand that going into it.

However, at some point, the thought crossed my mind that maybe Audacity was simply unsigning and then chopping off the lower 8 bits of the sample data and calling it a day, rather than applying any sort of rounding rules to reduce noise in quieter passages. I decided to investigate it further, and sure enough, that’s exactly what it was doing. I responded by showing the program my favorite finger and writing an EASy68K script to handle the conversion properly, and the results were a fair bit cleaner in terms of signal noise.
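To make the difference concrete, here is a minimal Python sketch of the two conversions. This is not the EASy68K script itself; the function names and the round-to-nearest rule are assumptions picked for illustration, and the real script may well round differently.

```python
def truncate_to_u8(sample: int) -> int:
    """Unsign a signed 16-bit sample, then simply drop the low 8 bits."""
    return ((sample + 0x8000) >> 8) & 0xFF


def round_to_u8(sample: int) -> int:
    """Unsign a signed 16-bit sample, then round to the nearest 8-bit step.

    Adding 0x80 (half an 8-bit step) before the shift rounds instead of
    truncating; the min() clamps the one case that would overflow to 256.
    """
    return min((sample + 0x8000 + 0x80) >> 8, 0xFF)


# Hypothetical near-silent passage: tiny values hovering around zero.
quiet = [-3, 2, -1, 4, -2, 1]

print([truncate_to_u8(s) for s in quiet])  # [127, 128, 127, 128, 127, 128]
print([round_to_u8(s) for s in quiet])     # [128, 128, 128, 128, 128, 128]
```

With truncation, anything even slightly below zero lands on 127 and anything at or above zero lands on 128, so near-silence turns into a constant one-step flicker. With rounding, the same input stays flat at 128.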

Sometimes you find small victories in your failures, even if you don’t see them right away.

Back to the Drawing Board

The days following my VDS experiment are a bit of a blur, but I’ll do my best to recount what happened. Even though I had thrown in the towel on VDS, I was still hungry to do something PCM-related on the Mega Drive. The idea of doing an audio streaming driver sorta just came to me from a place of my earlier ambitions when I first joined SSRG years ago. A streaming driver is something I had wanted to do back then even before I knew a single Z80 opcode. So, without much of a second thought, I kinda went “fuck it” and decided to see how viable this dream from early on really was.

I knew going into this that no matter what PCM format I settled on, my driver was likely going to require the use of a cartridge mapper to be anywhere near viable for a full game OST, and it was something I personally felt okay with. To me, it didn’t seem like it was all that different from what it ended up getting used for in the only MD game to contain a mapper (SSFII), and when looking at the ratio of how much space music took up vs. every other asset on CD-based games of the era, it seemed pretty reasonable by comparison. I understand some people will disagree with that perspective, but this is just how I personally feel about it.

That said, I still wanted to find a PCM format that would make the most out of every byte without sounding like crap. Having learned my lesson with VDS, I decided that creating a format from scratch was way out of my depth, so I needed to find a pre-existing one that was well-documented, had encoding tools that still worked, and had source code for a decoder that I’d be able to reference. However… it turned out that there are very, very few viable PCM formats out there that meet these criteria. COVOX ADPCM was a tempting option since it could go down to 2 bits per sample, but the encoder I found was old and it had no decoder source. Not to mention… even the 4-bit version of the format sounded worse to me than standard 4-bit DPCM.

That’s when it hit me: why not just use standard 4-bit DPCM? It’s a format I’ve worked with before and fully understand, source code is readily available for an encoder, and I even had some old source code lying around from a DPCM routine that I helped Aurora optimize ages ago. That was enough for me to convince myself to look into it more deeply, despite a couple of big concerns I had about going in this direction.

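Tackling the Shortcomings of DPCM

Perhaps my biggest concern with the DPCM format was the fact that it was seemingly impossible to detect the end of a sound clip in an elegant way. Most pre-existing implementations of this format on the MD rely on using a length counter to track how many bytes of the sound clip are left to play. However, due to Z80 limitations, you can really only viably have a length counter at a max value of either 0x7FFF or 0xFFFF depending on how it’s implemented. This is fine when the only types of sound clips you have to worry about playing are drums or voice clips that don’t last more than 2 seconds tops, but a full song is gonna be maaaaaany bytes longer than what such a small counter could possibly hope to account for (no pun intended).

The way we could get around this is by using an end flag to simply tell the driver that we’re at the end of the sound clip and don’t need to play any more bytes after it. There’s one slight problem, though; that’s damn near impossible to get away with on a delta-based format. If you wanted to designate a byte value of 0x00 as the end flag on an 8-bit raw PCM format, it’s fairly trivial. You can simply give any value of 0x00 in the sound clip a promotion to a value of 0x01 and add an extra 0x00 at the very end. In a delta-based format, however, each value corresponds to the difference from the previous sample to the current one. This means that every delta-encoded sample needs every sample that came before it to be correct in order to produce the correct value upon decoding. Therefore, you can't make any byte value an end flag because it could very easily throw off the sample values in a really nasty way. It's impossible to avoid that… or is it? Let’s take a closer look at the delta decoding table:

0x00, 0x01, 0x02, 0x04, 0x08, 0x10, 0x20, 0x40

0x80, 0xFF, 0xFE, 0xFC, 0xF8, 0xF0, 0xE0, 0xC0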

You’ll notice one of the values is 0x80, and that corresponds to a 4-bit delta value of 8. This is significant because it means having two delta values of 8 in a row (a byte value of 88) would cause the accumulated sample to overflow upon decoding. This caught my eye because ValleyBell’s PCM2DPCM tool has two different anti-overflow algorithms. I figured one of them had to make a byte value of 88 impossible, so naturally, I tested both of them out on a test sound clip and checked the results with a hex editor. The 2nd anti-overflow algorithm didn’t prevent byte values of 88 from being produced, but the 1st one did! This meant that an end flag was possible after all. All I had to do now was swap decoding values 0x00 and 0x80 around like this:

0x80, 0x01, 0x02, 0x04, 0x08, 0x10, 0x20, 0x40

0x00, 0xFF, 0xFE, 0xFC, 0xF8, 0xF0, 0xE0, 0xC0

Swapping the positions of those two values basically just allowed me to use 00 instead of 88 as the end flag value; it’s easier to detect and there’s less paperwork. Armed with this knowledge, I went ahead and ran a test to encode a sound clip with this modified delta table and anti-overflow. The results were… fucked up, somehow. As it turns out, the anti-overflow algorithm didn’t get along with the new delta table at all. I decided to get around this by converting the clip with the standard table and just anti-overflow, then using another quick and dirty EASy68K script to swap the 8s and 0s around in each byte after the fact and add the end flag.

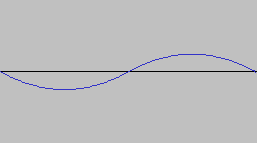
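The post-processing step and the end-flag check can be sketched in Python roughly like this. It is only an illustration, not SONAR's actual Z80 code or the EASy68K script: the function names are made up, and the high-nybble-first order and the 0x80 starting sample are assumptions.

```python
# Standard delta table from the post, and the variant with 0x00 and 0x80 swapped.
STANDARD = [0x00, 0x01, 0x02, 0x04, 0x08, 0x10, 0x20, 0x40,
            0x80, 0xFF, 0xFE, 0xFC, 0xF8, 0xF0, 0xE0, 0xC0]
SWAPPED = [0x80] + STANDARD[1:8] + [0x00] + STANDARD[9:]
END_FLAG = 0x00


def swap_nybbles_0_and_8(data: bytes) -> bytes:
    """Turn standard-table DPCM bytes into swapped-table bytes plus an end flag."""
    swap = {0x0: 0x8, 0x8: 0x0}
    out = bytearray()
    for b in data:
        if b == 0x88:
            # 0x88 would become 0x00, i.e. a false end flag. The encoder's
            # anti-overflow pass is what is supposed to rule this out.
            raise ValueError("input contains 0x88; end flag would be ambiguous")
        hi, lo = b >> 4, b & 0x0F
        out.append((swap.get(hi, hi) << 4) | swap.get(lo, lo))
    out.append(END_FLAG)
    return bytes(out)


def decode(stream: bytes, start: int = 0x80) -> list[int]:
    """Decode with the swapped table until the end flag (assumed: high nybble first)."""
    sample, out = start, []
    for b in stream:
        if b == END_FLAG:
            break
        for nyb in (b >> 4, b & 0x0F):
            sample = (sample + SWAPPED[nyb]) & 0xFF  # 8-bit wraparound add
            out.append(sample)
    return out


encoded = swap_nybbles_0_and_8(bytes([0x10, 0x09]))
print(encoded.hex())    # 188900
print(decode(encoded))  # [129, 129, 129, 128]
```

Note that a standard byte of 00 (two "no change" deltas, which is very common in quiet passages) becomes 88 after the swap, so the only byte that can ever come out as 00 is the one that was already impossible.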

With my major concern put to rest, I moved to squash another minor concern that I had: the noise level. I would say this is a large part of why people perceive DPCM as sounding kinda crappy. A sound clip encoded with DPCM is more or less an approximation of the source signal, and since we only have 16 possible delta values to work with, there’s going to be more signal noise. This is something I knew I couldn’t outright eliminate without turning to a form of ADPCM, but I knew I could reduce how perceivable it was, so I got to work.

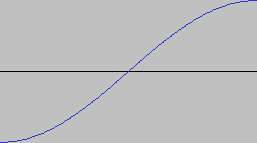

I had already sorta started laying the groundwork for reducing the perception of signal noise back when I worked on VDS, but it was only around now that that started paying off. The 16-bit to 8-bit sound clip conversion script I made back then proved very useful here. Unsurprisingly, the cleaner an 8-bit sound clip is before you convert it, the cleaner the DPCM version will sound after conversion. This made a surprising amount of difference and really makes me wonder if DPCM got a bad rap for the wrong reasons. I still wanted to take it further, though, so I started investigating optimal playback rates. My goal was to figure out at what rates the noise level becomes indistinguishable from the 8-bit equivalent. I also paid particular attention to real MD hardware for these tests, as I suspected the low-pass filter might mask the signal noise a little.

What I ultimately found was, at playback rates of around 20kHz or above, the signal noise differences between 8-bit PCM and 4-bit DPCM were negligible to my ears, especially on real MD hardware and the BlastEm emulator, where the low-pass filter indeed smoothed things out. With that, I had done it. I had a working variation of DPCM that was viable for streaming full songs on the Mega Drive. By my math at the time, one could expect to fit just about 1 minute and 40 seconds of audio per MB of ROM. This means if you dedicated 12 MB worth of ROM mapper banks to your game’s soundtrack, you could fit about 20 minutes of audio within that space, which seemed quite reasonable to me, if a little tight.
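The storage math is easy to sanity-check. The sketch below assumes 1 MB means 1,048,576 bytes, two 4-bit samples per byte, and a playback rate of 20.5kHz (the rate used for later tests); `seconds_per_mb` is just a made-up helper name.

```python
def seconds_per_mb(rate_hz: float, bits_per_sample: int = 4,
                   mb_bytes: int = 1024 * 1024) -> float:
    """How many seconds of audio fit in one MB at the given rate and bit depth."""
    bytes_per_second = rate_hz * bits_per_sample / 8
    return mb_bytes / bytes_per_second


per_mb = seconds_per_mb(20_500)
print(f"{per_mb:.1f} seconds per MB")           # 102.3 seconds per MB
print(f"{12 * per_mb / 60:.1f} minutes in 12 MB")  # 20.5 minutes in 12 MB
```

That lines up with the rough figures above: a bit over 1 minute 40 seconds per MB, and about 20 minutes in 12 MB.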

It was around this time I shared some of my findings in a few places, including a concept pitch for a ROM hack project where I planned to use the eventual driver. After starting to put together a team for the ROM hack project though… I started attending college again, which removed a significant amount of free time from my schedule. As such, I ultimately had to hit the pause button on both projects. As I started to get the hang of my coursework and other responsibilities though, I found myself ready to develop the driver only a few months later.

At some point early in development (I don’t exactly remember when), I settled on naming the driver SONAR. No, it’s not a clever acronym, or even really a name that ties into anything related to the technology employed by this driver. In reality, I don’t fully know what drove me to pick the name SONAR aside from maybe the fact that it just sounds cool and powerful. If I had to guess the path of logic my brain took to justify that, it’s probably something flimsy like actual sonar technology being a powerful use of sound waves and this driver also being a powerful use of sound waves… or some other similarly hot bullshit. Questionable logical origins or not though, the name felt like enough of a keeper for me to whip up a logo for it, and it’s been here to stay ever since.

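Coding the Damn Thing

After getting the new DPCM format finalized, there were some other goals I needed to focus on while developing SONAR to ensure maximum quality. One of these was DMA protection. For the uninitiated, when a DMA is in progress, the Z80 isn't allowed to access the 68K address space. This means the Z80 will pause if it tries to read samples from ROM during a DMA, which leads to really stuttery playback on drivers that don't take that into account. We can reduce the likelihood of stuttering by reading samples into a buffer faster than we play them back. This allows us to tell the driver to only play from the buffer while a DMA is in progress. Thankfully, the DPCM code I worked on with Aurora was designed to do just that, so designing the playback loop was mostly a matter of reworking that code to fit the new format.

Another goal I had, in the beginning, was making sure decoding times were equal between the high and low 4-bit delta values. On many DPCM drivers I’ve looked at over the years, the high nybble takes longer to decode each loop than the low nybble, which I figured also contributed to lower quality playback. As such, I tried my best to make sure cycle times were even between decoding each nybble, and with some finesse, I succeeded! In the early versions of SONAR, decoding times were even for each sample, no matter what. Eventually, however, I conducted a test where I allowed the decoding times to be more uneven and found there was no perceivable drop in quality to my ears. After discovering this, I decided to retire that aspect of SONAR for a higher maximum playback rate of around 24kHz at the time. However, I still conducted future tests at a rate of 20.5kHz; I simply found the option to go higher than that appealing. Further tweaks would eventually cut the max rate down to 21.7kHz, which is where it remains today.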

Perhaps my most important goal with SONAR, however, was to make it simple and easy to work with, yet versatile and extremely powerful. Early on, I thought a good way to do this was to allow songs to be split up into individual clips and support a module format to go along with it. The concept was that a song’s module file would be a list of instructions that tell the driver which clips to play in what order. These module instructions are presented as macros for easy editing, readability, and assembling.

Originally, module instructions were translated directly into Z80 instructions that would be executed when a song was ready to play. This turned out to be really wasteful of ROM and Z80 RAM, so I simplified it to a more lightweight system that I call the clip playlist. Each entry in the clip playlist is only 3 bytes long; 1 byte for the clip’s Z80 bank number, and another 2 bytes for the clip’s address within that bank. Additionally, this system supports jumping to earlier or later parts of the clip playlist when the bank value is 0, so things like looping are possible.
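As a rough illustration of the clip playlist layout, here is a Python sketch that packs and walks 3-byte entries. Only the 1-byte bank plus 2-byte address layout and the "bank 0 means jump" rule come from the description above. The little-endian byte order, the idea that a jump's address field holds a byte offset into the playlist, and all of the names are assumptions.

```python
import struct

ENTRY = struct.Struct("<BH")  # 1-byte bank, 2-byte address (byte order is assumed)
JUMP_BANK = 0                 # bank 0 marks a jump entry


def build_playlist(clips, loop_to_entry=None):
    """clips: list of (bank, addr). Optionally end with a jump back to an entry index."""
    data = b"".join(ENTRY.pack(bank, addr) for bank, addr in clips)
    if loop_to_entry is not None:
        # Assumption: the jump target is stored as a byte offset into the playlist.
        data += ENTRY.pack(JUMP_BANK, loop_to_entry * ENTRY.size)
    return data


def play_order(playlist, max_clips, max_steps=1000):
    """Return up to max_clips (bank, addr) pairs in playback order, following jumps."""
    order, pos = [], 0
    for _ in range(max_steps):  # step budget so a bad jump cannot spin forever
        if len(order) == max_clips or pos >= len(playlist):
            break
        bank, addr = ENTRY.unpack_from(playlist, pos)
        if bank == JUMP_BANK:
            pos = addr
        else:
            order.append((bank, addr))
            pos += ENTRY.size
    return order


# Hypothetical song: an intro clip, then a second clip that loops forever.
playlist = build_playlist([(1, 0x8000), (2, 0x9000)], loop_to_entry=1)
print(len(playlist))            # 9
print(play_order(playlist, 4))  # [(1, 32768), (2, 36864), (2, 36864), (2, 36864)]
```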

I also decided that I wanted an intuitive command system of some kind early on. This would be used for things like adjusting the playback speed on the fly, volume control, fading a song in or out, and pausing, stopping, or resetting the current song. Originally I had planned to let the Z80 take care of processing commands all by itself, but I later realized that it would degrade the playback quality too much to be viable. Instead, the plan is to have the Z80 only check for pitch updates and pausing, while a 68K-side command processor handles the more advanced parts.

Oh, I also originally wanted SONAR to handle SFX processing near the beginning of development, but uh… no. That was naive as hell to even dream of and I’m so glad I didn’t because trying to make that magic happen probably would have broken me.

Of course, there’s still a lot of work to be done. While I’ve roughly prototyped out how a few commands are going to work, I have not started building the 68K side of SONAR yet. Additionally, I’m still using EASy68K scripts for my encoding workflow, which will not be acceptable for the majority of users, so I need to brush up on my high-level language skills again and write a proper all-in-one tool for it. More than likely, it’ll be written in C# since it doesn’t immediately make me want to tear my long, beautiful hair out.

There are also some additional things I would like to at least investigate and put on the road map prior to the first public release of SONAR. I had a concept for an optimization option for the tool and I want to see how viable it is. The idea is to break a song down into clips the size of the song’s individual beats, combine the duplicates, and stitch it back together, with a proper module file and clip folder provided as the output. You would need to know the exact BPM of the song for this and it may not always save much space, but every byte counts, right?
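Here is a bare-bones Python sketch of that beat-splitting idea, purely as a thought experiment. It assumes the split happens on raw sample bytes, that only byte-identical beats count as duplicates, and that the beat length can be rounded to a whole number of samples; `dedupe_beats` is a hypothetical name, not part of any planned tool.

```python
def dedupe_beats(samples: bytes, sample_rate: int, bpm: float):
    """Split audio into beat-sized clips and keep only one copy of each.

    Returns (clips, order): the unique clips, and the list of clip indices
    that reproduces the original song when played back in sequence.
    """
    beat_len = round(sample_rate * 60 / bpm)  # samples per beat
    clips, index_of, order = [], {}, []
    for start in range(0, len(samples), beat_len):
        chunk = samples[start:start + beat_len]
        if chunk not in index_of:
            index_of[chunk] = len(clips)
            clips.append(chunk)
        order.append(index_of[chunk])
    return clips, order


# Toy input: 4 samples per beat, with the first beat repeated later on.
song = b"AAAA" + b"BBBB" + b"AAAA" + b"CC"
clips, order = dedupe_beats(song, sample_rate=4, bpm=60)
print(order)       # [0, 1, 0, 2]
print(len(clips))  # 3
```

One catch worth keeping in mind: in a delta format, what a clip decodes to depends on the sample value it starts from, so two beats that match as raw PCM are not guaranteed to stay interchangeable once encoded.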

Perhaps an even more imminent plan is to create a custom version of Aurora’s upcoming sound driver, Fractal Sound, that’ll fully support SONAR. This version of Fractal Sound will likely be an SFX-only driver, while SONAR handles the music. However, this does mean there might be a small wait on SONAR, even if it’s finished before Fractal Sound is. I would really prefer to release Fractal + SONAR as the full package, but I might change my mind.

I’ll also likely need to hunt down some beta-testers prior to the first release and write a fair amount of comprehensive documentation on how to work the damn thing. I may also put together my own sound test program (similar to the one Aurora’s making for Fractal) that people can just drop their converted songs into, assemble, and have a quick listen to. There’s probably even more that I’m forgetting to mention, too, but after writing this post for the last 3 days, I can barely be arsed to remember right at this moment. :V

All in all, the future is looking bright for this project and I cannot wait to share more of my journey with all of you as I continue to press forward. If you made it this far through my rambling and babbling, I’m both extremely grateful and, quite frankly, impressed that you were able to bear with me this whole time. I don’t exactly make it the easiest to follow everything I say, so if you have any questions or would like something explained more properly, feel free to ask.

Until next time, I wish you all a wonderful day, afternoon, evening, or whatever~

With my major concern put to rest, I moved to squash another minor concern that I had: the noise level. I would say this is a large part of why people perceive DPCM as sounding kinda crappy. A sound clip encoded with DPCM is more or less an approximation of the source signal, and since we only have 16 possible delta values to work with, there’s going to be more signal noise. This is something I knew I couldn’t outright eliminate without turning to a form of ADPCM, but I knew I could reduce how perceivable it was, so I got to work.

I had already sorta started laying the groundwork for reducing the perception of signal noise back when I worked on VDS, but it was only around now that that started paying off. The 16-bit to 8-bit sound clip conversion script I made back then proved very useful here. Unsurprisingly, the cleaner an 8-bit sound clip is before you convert it, the cleaner the DPCM version will sound after conversion. This made a surprising amount of difference and really makes me wonder if DPCM got a bad rap for the wrong reasons. I still wanted to take it further, though, so I started investigating optimal playback rates. My goal was to figure out at what rates the noise level becomes indistinguishable from the 8-bit equivalent. I also paid particular attention to real MD hardware for these tests, as I suspected the low-pass filter might mask the signal noise a little.

What I ultimately found was, at playback rates of around 20kHz or above, the signal noise differences between 8-bit PCM and 4-bit DPCM were negligible to my ears, especially on real MD hardware and the BlastEm emulator, where the low-pass filter indeed smoothed things out. With that, I had done it. I had a working variation of DPCM that was viable for streaming full songs on the Mega Drive. By my math at the time, one could expect to fit just about 1 minute and 40 seconds of audio per MB of ROM. This means if you dedicated 12 MB worth of ROM mapper banks to your game’s soundtrack, you could fit about 20 minutes of audio within that space, which seemed quite reasonable to me, if a little tight.

It was around this time I shared some of my findings in a few places, including a concept pitch for a ROM hack project where I planned to use the eventual driver. After starting to put together a team for the ROM hack project though… I started attending College again, which removed a significant amount of free time from my schedule. As such, I ultimately had to hit the pause button on both projects. As I started to get the hang of my coursework and other responsibilities though, I found myself ready to develop the driver only a few months later.

At some point early in development (I don’t exactly remember when), I settled on naming the driver SONAR. No, it’s not a clever acronym, or even a name that really ties into anything related to the technology employed by this driver. In reality, I don’t fully know what drove me to pick the name SONAR aside from maybe the fact that it just sounds cool and powerful. If I had to guess the path of logic my brain took to justify that, it’s probably something flimsy like actual sonar technology being a powerful use of sound waves and this driver also being a powerful use of sound waves… or some other similarly hot bullshit. Questionable logical origins or not though, the name felt like enough of a keeper for me to whip up a logo for it, and it’s been here to stay ever since.

Coding the Damn Thing

After getting the new DPCM format finalized, there were some other goals I needed to focus on while developing SONAR to ensure maximum quality. One of these was DMA protection. For the uninitiated, when a DMA is in progress, the Z80 isn't allowed to access the 68K address space. This means the Z80 will pause if it tries to read samples from ROM during a DMA, which leads to really stuttery playback on drivers that don't take that into account. We can reduce the likelihood of stuttering by reading samples into a buffer faster than we play them back. This allows us to tell the driver to only play from the buffer while a DMA is in progress. Thankfully, the DPCM code I worked on with Aurora was designed to do just that, so designing the playback loop was mostly a matter of reworking that code to fit the new format.

Another goal I had, in the beginning, was making sure decoding times were equal between the high and low 4-bit delta values. On many DPCM drivers I’ve looked at over the years, the high nybble takes longer to decode each loop than the low nybble, which I figured also contributed to lower quality playback. As such, I tried my best to make sure cycle times were even between decoding each nybble, and with some finesse, I succeeded! For the early versions of SONAR, its decoding times were even for each sample, no matter what. Eventually, however, I conducted a test where I allowed the decoding times to be more uneven and found there was no perceivable drop in quality to my ears. After discovering this, I decided to retire that aspect of SONAR for a higher maximum playback rate of around 24kHz at the time. However, I still conducted future tests at a rate of 20.5kHz; I simply found the option to go higher than that appealing. Further tweaks would eventually cut the max rate down to 21.7kHz, which is where it remains today.
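To make the read-ahead buffering idea from the DMA protection paragraph a bit more concrete, here's a toy Python simulation. It is not SONAR's actual Z80 loop, and it glosses over a lot (a real Z80 stalls on the bus rather than skipping a sample). The function name, the tick-based timing, and the buffer size are all made up for illustration.

```python
from collections import deque


def simulate(total_ticks, dma_ticks, reads_per_tick, capacity):
    """Count playback ticks where no sample was available (a 'stutter').

    Each tick the driver plays one sample from the buffer. ROM can only
    be read on ticks that are not inside a DMA window.
    """
    buffer = deque()
    stutters = 0
    for tick in range(total_ticks):
        if tick not in dma_ticks:
            # ROM is reachable: top the buffer up, faster than playback.
            for _ in range(reads_per_tick):
                if len(buffer) < capacity:
                    buffer.append(tick)  # stand-in for a sample byte
        if buffer:
            buffer.popleft()             # play one sample
        else:
            stutters += 1                # nothing buffered: audible gap
    return stutters


dma_window = set(range(100, 150))        # a 50-tick DMA, purely hypothetical
with_read_ahead = simulate(300, dma_window, reads_per_tick=2, capacity=64)
without_read_ahead = simulate(300, dma_window, reads_per_tick=1, capacity=64)
```

With two reads per tick the buffer fills up before the DMA starts and rides out the whole window. With one read per tick the buffer never gets ahead of playback, so every tick of the DMA window turns into a gap.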

Perhaps my most important goal with SONAR, however, was to make it simple and easy to work with, yet versatile and extremely powerful. Early on, I thought a good way to do this was to allow songs to be split up into individual clips and support a module format to go along with it. The concept was that a song’s module file would be a list of instructions that tell the driver which clips to play in what order. These module instructions are presented as macros for easy editing, readability, and assembling.

Originally, module instructions were translated directly into Z80 instructions that would be executed when a song was ready to play. This turned out to be really wasteful of ROM and Z80 RAM, so I simplified it to a more lightweight system that I call the clip playlist. Each entry in the clip playlist is only 3 bytes long: 1 byte for the clip’s Z80 bank number and 2 bytes for the clip’s address within that bank. Additionally, this system supports jumping to earlier or later parts of the clip playlist when the bank value is 0, so things like looping are possible.
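Here's a small Python sketch of how a 3-byte playlist like that could be packed and walked. Only the 3-byte layout and the "bank 0 means jump" rule come from the description above. The little-endian byte order, treating the 2-byte field of a jump entry as a byte offset into the playlist, and the function names are my own assumptions for the sake of the example.

```python
import struct

ENTRY_SIZE = 3  # 1 byte bank + 2 bytes address


def pack_entry(bank, address):
    """Pack one entry; little-endian address order is an assumption."""
    return struct.pack("<BH", bank, address)


def walk_playlist(data, max_steps):
    """Return the (bank, address) clips visited in the first max_steps entries."""
    pos = 0
    clips = []
    for _ in range(max_steps):
        bank, value = struct.unpack_from("<BH", data, pos)
        if bank == 0:
            pos = value              # assumption: jump target is a byte offset
        else:
            clips.append((bank, value))
            pos += ENTRY_SIZE
    return clips


# Intro clip, then a second clip that loops forever (example values only).
playlist = (pack_entry(1, 0x8000)
            + pack_entry(2, 0x9000)
            + pack_entry(0, ENTRY_SIZE))  # jump back to the second entry
order = walk_playlist(playlist, max_steps=6)
```

The step limit is only there so the example terminates, since a looping playlist never ends on its own.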

I also decided that I wanted an intuitive command system of some kind early on. This would be used for things like adjusting the playback speed on the fly, volume control, fading a song in or out, and pausing, stopping, or resetting the current song. Originally I had planned to let the Z80 take care of processing commands all by itself, but I later realized that it would degrade the playback quality too much to be viable. Instead, the plan is to have the Z80 only check for pitch updates and pausing, while a 68K-side command processor handles the more advanced parts.

Oh, I also originally wanted SONAR to handle sfx processing near the beginning of development, but uh… no. That was naive as hell to even dream of and I’m so glad I didn’t because trying to make that magic happen probably would have broken me.

Where Development Stands Now

Development has been a little sparse this last week or so, what with Turkey Day and all that happening here in the States, but other than that, it’s going fairly smoothly.

Of course, there’s still a lot of work to be done. While I’ve roughly prototyped out how a few commands are going to work, I have not started building the 68K side of SONAR yet. Additionally, I’m still using EASy68K scripts for my encoding workflow, which will not be acceptable for the majority of users, so I need to brush up on my high-level language skills again and write a proper all-in-one tool for it. More than likely, it’ll be written in C# since it doesn’t immediately make me want to tear my long, beautiful hair out.

There are also some additional things I would like to at least investigate and put on the road map prior to the first public release of SONAR. I had a concept for an optimization option for the tool and I want to see how viable it is. The idea is to break a song down into clips the size of the song’s individual beats, combine the duplicates, and stitch it back together, with a proper module file and clip folder provided as the output. You would need to know the exact BPM of the song for this and it may not always save much space, but every byte counts, right?
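As a rough illustration of that beat-splitting idea, here's a minimal Python sketch. It's just the concept under some big assumptions: beats are exactly `sample_rate * 60 / bpm` samples long, and duplicates only count if they match byte for byte. The function name and return shape are made up, and it ignores anything format-specific, like the fact that a DPCM clip's output depends on the accumulator value at the point where the clip starts.

```python
def dedupe_beats(samples, sample_rate, bpm):
    """Split audio into beat-sized clips and collapse exact duplicates.

    Returns (unique_clips, order), where order lists which unique clip
    to play for each beat, i.e. the raw material for a clip playlist.
    """
    beat_len = round(sample_rate * 60 / bpm)
    unique_clips = []
    index_of = {}   # clip bytes -> index into unique_clips
    order = []
    for start in range(0, len(samples), beat_len):
        chunk = bytes(samples[start:start + beat_len])
        if chunk not in index_of:
            index_of[chunk] = len(unique_clips)
            unique_clips.append(chunk)
        order.append(index_of[chunk])
    return unique_clips, order


# Tiny fake "song": four beats of four samples each, three of them identical.
song = bytes([1, 2, 3, 4] * 2 + [9, 9, 9, 9] + [1, 2, 3, 4])
clips, order = dedupe_beats(song, sample_rate=4, bpm=60)
rebuilt = b"".join(clips[i] for i in order)
```

Exact matching like this only really pays off when the source audio repeats sample for sample, which is part of why the savings may vary a lot from song to song.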

Perhaps an even more imminent plan is to create a custom version of Aurora’s new upcoming sound driver, Fractal Sound, that’ll fully support SONAR. This version of Fractal Sound will likely be an SFX-only driver, while SONAR handles the music. However, this does mean there might be a small wait on SONAR, even if it’s finished before Fractal Sound is. I would really prefer to release Fractal + SONAR as the full package, but I might change my mind.

I’ll also likely need to hunt down some beta-testers prior to the first release and write a fair amount of comprehensive documentation on how to work the damn thing. I may also put together my own sound test program (similar to the one Aurora’s making for Fractal) that people can just drop their converted songs into, assemble, and have a quick listen to. There’s probably even more that I’m forgetting to mention, too, but after writing this post for the last 3 days, I can barely be arsed to remember right at this moment. :V

All in all, the future is looking bright for this project and I cannot wait to share more of my journey with all of you as I continue to press forward. If you made it this far through my rambling and babbling, I’m both extremely grateful and, quite frankly, impressed that you were able to bear with me this whole time. I don’t exactly make it the easiest to follow everything I say, so if you have any questions or would like something explained more properly, feel free to ask.

Until next time, I wish you all a wonderful day, afternoon, evening, or whatever~
